LLM Math: Why 99.9% Right is Still 100% Wrong

“LLM math is close enough. And it’s getting better!”

I hear this a lot when developers (or investors) talk about LLM-generated calculations. And I get it. If all you need is a quick, “general ballpark” answer, close enough feels fine. Use it, iterate, do the hard math later.

Two problems with that. First, in regulated industries there is no “close enough.” There’s correct, and there’s wrong, and wrong gets you audited, fined, or sued. Second, the potential to drive AI workflows deep into the enterprise is enormous, and right now it’s stuck at “close enough.”

The customer call I dreaded the most

For most of my career, I handled customer service. In the early days I took phone calls on an 800 number; over the last 15 years it was email only. I generally enjoy talking with customers, and I often get insight I wouldn’t get otherwise. But there was always one call I dreaded: “Your numbers are wrong.”

The good news is that PowerOne was rarely wrong. We spent years honing its engine to make sure the answers matched popular HP and TI calculators. Sometimes a customer would say an answer didn’t match Excel. That was often true: Excel has quirks in some financial math because Microsoft decided backward compatibility with Lotus 1-2-3 and VisiCalc was more important. Other times it was a user mistake. And other times it was a case where there is no mathematical consensus on the “correct” result.

But what I do know is that being wrong would have been the death of the PowerOne calculator, so it was best not to be wrong.

We have to get LLM Math right

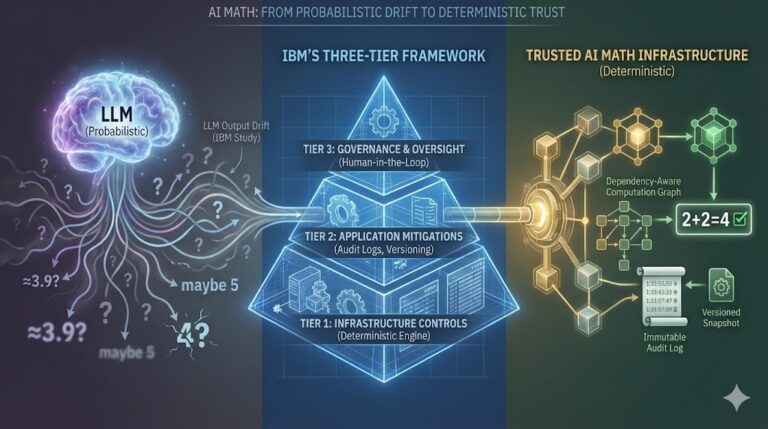

Let’s be clear: we will not claim the full benefit of natural language computing, or other probabilistic AI capabilities, until we can deliver reliable, auditable calculations at scale.

I’ve spent decades building calculators for professionals: mortgage brokers, construction professionals, medical professionals, engineers, real estate investors, and so on. In my experience, how clever the AI is doesn’t matter if those professionals can’t trust the numbers.

When a loan officer shows a client an amortization schedule, that schedule has to be right. Not 99.9% right. Right.
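To make “Not 99.9% right. Right.” concrete, here is a minimal Python sketch of a level-payment amortization schedule. It assumes monthly compounding, rounding to the cent with ROUND_HALF_UP, and a final payment that absorbs accumulated rounding; those conventions are illustrative, and real lenders’ rules vary. The function names are hypothetical. It uses `decimal.Decimal` rather than binary floats so every cent is exact and the schedule comes out identical on every run.

```python
from decimal import Decimal, ROUND_HALF_UP, getcontext

getcontext().prec = 34  # generous precision for intermediate results
CENT = Decimal("0.01")


def monthly_payment(principal: Decimal, annual_rate: Decimal, months: int) -> Decimal:
    """Level payment P * r / (1 - (1 + r) ** -n), rounded to the cent (assumed convention)."""
    r = annual_rate / 12
    if r == 0:
        return (principal / months).quantize(CENT, rounding=ROUND_HALF_UP)
    payment = principal * r / (1 - (1 + r) ** -months)
    return payment.quantize(CENT, rounding=ROUND_HALF_UP)


def amortization_schedule(principal: Decimal, annual_rate: Decimal, months: int):
    """Return rows of (month, payment, interest, principal_paid, balance)."""
    payment = monthly_payment(principal, annual_rate, months)
    r = annual_rate / 12
    balance = principal
    rows = []
    for k in range(1, months + 1):
        # Interest for the month is rounded to the cent before it is applied
        interest = (balance * r).quantize(CENT, rounding=ROUND_HALF_UP)
        if k == months:
            # Final payment absorbs accumulated rounding so the balance ends at exactly zero
            principal_paid = balance
            pay = principal_paid + interest
        else:
            principal_paid = payment - interest
            pay = payment
        balance -= principal_paid
        rows.append((k, pay, interest, principal_paid, balance))
    return rows


if __name__ == "__main__":
    rows = amortization_schedule(Decimal("200000"), Decimal("0.06"), 360)
    for row in rows[:3] + rows[-1:]:
        print(row)
```

For a $200,000 loan at 6% over 360 months this gives the familiar $1,199.10 payment, and the last row’s balance is exactly zero. Run the same loop in binary floats and a balance that should be zero can end as a tiny nonzero residue, which is precisely the kind of “close enough” a client or auditor will notice.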

When an insurance underwriter calculates a premium, the premium has to match the formula on file. Exactly. Because there’s a regulator somewhere who might ask.

But just getting LLM Math right isn’t enough

Every calculation needs to be auditable, repeatable, and traceable. Business logic needs to be stored and versioned.

This is what production means in high-trust industries. It’s not about throughput or latency. It’s about certainty. Can you prove the answer is correct? Can you show your work? Can you reproduce it six months from now?
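“Auditable, repeatable, and traceable” can be made concrete too. The sketch below is one hypothetical way to do it, not TrueMath’s design: every result is stored with the ID and version of the business logic that produced it, plus a SHA-256 fingerprint of its inputs and output, so the calculation can be replayed months later and checked against the record. The `FORMULAS` registry, `CalculationRecord`, `run`, and `reproduce` names, and the simple-interest formula, are all illustrative assumptions.

```python
import hashlib
import json
from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

# Hypothetical registry of versioned business logic: (formula_id, version) -> function.
# Old versions are never edited in place, so a stored record can always be replayed.
FORMULAS = {
    ("simple_interest", "1.0.0"): lambda principal, rate, years: (
        principal * rate * years
    ).quantize(CENT, rounding=ROUND_HALF_UP),
}


@dataclass(frozen=True)
class CalculationRecord:
    formula_id: str
    formula_version: str
    inputs: dict  # input name -> Decimal
    result: Decimal

    def fingerprint(self) -> str:
        """SHA-256 over a canonical JSON form of the formula, inputs, and result."""
        payload = {
            "formula_id": self.formula_id,
            "formula_version": self.formula_version,
            # Decimals are serialized as strings so they never pass through a float
            "inputs": {name: str(value) for name, value in self.inputs.items()},
            "result": str(self.result),
        }
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def run(formula_id: str, version: str, **inputs: Decimal) -> CalculationRecord:
    """Evaluate one specific formula version and capture everything needed to audit it."""
    fn = FORMULAS[(formula_id, version)]
    return CalculationRecord(formula_id, version, dict(inputs), fn(**inputs))


def reproduce(record: CalculationRecord) -> bool:
    """Re-run the recorded formula version on the recorded inputs and compare fingerprints."""
    fresh = run(record.formula_id, record.formula_version, **record.inputs)
    return fresh.fingerprint() == record.fingerprint()
```

Replaying a stored record either reproduces the same fingerprint or shows that something (inputs, logic, or result) no longer matches. A model that only produces plausible outputs offers no equivalent check.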

LLMs can’t do any of that. They’re black boxes that produce plausible outputs. Plausible isn’t auditable. Plausible isn’t repeatable. Plausible isn’t compliant.

If you’re building for fintech, proptech, or insurtech, you need math you can stand behind. That’s what we’re building at TrueMath.

Reach out: elia.freedman@truemath.ai

Learn more: truemath.ai

Sign up for early access: https://app.truemath.ai/signup