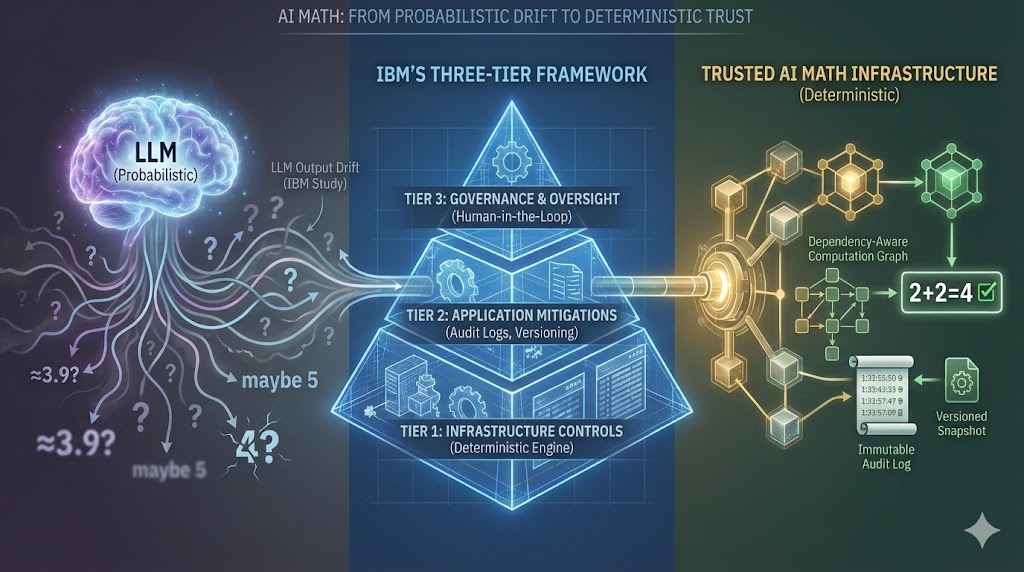

Why AI Math Needs Deterministic Infrastructure: Lessons from IBM’s LLM Output Drift Study

LLMs Are Powerful — But Unreliable for Math

Large Language Models (LLMs) have unlocked a new era of productivity: summarizing documents, generating code, writing policy memos, and answering complex questions with ease. But when it comes to mathematical reliability, their probabilistic nature becomes a liability.

A new study by IBM Research, "LLM Output Drift: Cross-Provider Validation & Mitigation for Financial Workflows," offers one of the most precise diagnoses yet. Even under controlled conditions, the same prompt produced different outputs across LLM providers, and even within the same provider over time.

For AI to earn a place in finance, insurance, real estate, healthcare, and other regulated domains, numerical answers must be consistent, traceable, and compliant. And that means rethinking how math is done inside AI systems.

IBM’s Three-Tier Framework for Trustworthy AI Outputs

IBM’s paper outlines a three-tier mitigation framework — essentially a technical checklist for any AI system expected to handle math in production.

Tier 1: Infrastructure Controls

- Deterministic math execution

- Validation across different model providers

- Prevention of configuration drift over time

Tier 2: Application-Level Mitigations

- Version-controlled calculation history

- Immutable audit logs and metadata

- Output replay for consistency and debugging

Tier 3: Governance & Oversight

- Human-in-the-loop fallback mechanisms

- Compliance with regulatory reporting requirements

- Transparency into logic, assumptions, and changes

These are not theoretical features. They’re emerging as baseline infrastructure requirements for AI workflows where accuracy, reproducibility, and auditability are non-negotiable.

What Trusted AI Math Infrastructure Looks Like

Meeting IBM’s mitigation framework means adopting an architecture that separates natural language reasoning from mathematical execution. In practice, that means building (or integrating) a system with the following characteristics:

Deterministic Math Engine

Identical inputs must produce identical results every time, regardless of LLM provider, prompt variation, or session state. Probabilistic guessing is not acceptable for calculations that drive decisions or compliance.
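To make this concrete, here is a minimal sketch of a deterministic calculation in Python. It is an illustration, not TrueMath's implementation: the function name `monthly_payment`, the 28-digit precision, and the banker's rounding mode are all assumptions chosen for the example. The point is that precision and rounding are pinned explicitly, so repeated calls with the same inputs cannot diverge.

```python
from decimal import Decimal, ROUND_HALF_EVEN, localcontext


def monthly_payment(principal: str, annual_rate: str, months: int) -> Decimal:
    """Fixed-rate loan payment using exact decimal arithmetic.

    Inputs arrive as strings so no binary floating-point value ever
    enters the calculation. (Hypothetical helper for illustration.)
    """
    with localcontext() as ctx:
        # Pin precision and rounding so the result does not depend on
        # whatever decimal context the caller happens to have active.
        ctx.prec = 28
        ctx.rounding = ROUND_HALF_EVEN

        p = Decimal(principal)
        r = Decimal(annual_rate) / Decimal(12)  # periodic rate
        if r == 0:
            payment = p / Decimal(months)
        else:
            growth = (Decimal(1) + r) ** months
            payment = p * r * growth / (growth - Decimal(1))
        # Round to cents using the pinned rounding mode.
        return payment.quantize(Decimal("0.01"))


first = monthly_payment("300000", "0.06", 360)
second = monthly_payment("300000", "0.06", 360)
assert first == second  # same inputs, same answer
```

An LLM would only need to extract the three inputs from the user's request; the arithmetic itself never passes through the model.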

Dependency-Aware Computation Graph

Rather than one-off equations, the system should resolve answers through a graph of variables and formulas, enabling backsolving, multi-step derivation, and reuse of intermediate results across domains.
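One way to picture such a graph is a small resolver built on Python's standard-library `graphlib`. The formula names (`gross_income`, `vacancy_loss`, `net_income`) and the `resolve` function are hypothetical, invented for this sketch. It covers forward resolution and reuse of intermediate results only; backsolving would need additional machinery that is not shown here.

```python
from decimal import Decimal
from graphlib import TopologicalSorter

# Hypothetical formulas: variable name -> (dependencies, function).
FORMULAS = {
    "gross_income": (
        ("rent", "units"),
        lambda rent, units: rent * units * 12,
    ),
    "vacancy_loss": (
        ("gross_income", "vacancy_rate"),
        lambda gross, rate: gross * rate,
    ),
    "net_income": (
        ("gross_income", "vacancy_loss", "expenses"),
        lambda gross, loss, expenses: gross - loss - expenses,
    ),
}


def resolve(inputs: dict) -> dict:
    """Compute every derivable variable in dependency order."""
    graph = {name: set(deps) for name, (deps, _) in FORMULAS.items()}
    values = dict(inputs)
    # static_order() yields each node only after all of its dependencies;
    # it raises graphlib.CycleError if the formulas are circular.
    for name in TopologicalSorter(graph).static_order():
        if name in values:
            continue  # supplied directly by the caller
        if name not in FORMULAS:
            raise KeyError(f"missing input: {name}")
        deps, fn = FORMULAS[name]
        values[name] = fn(*(values[d] for d in deps))
    return values


result = resolve({
    "rent": Decimal("1000"),
    "units": Decimal("10"),
    "vacancy_rate": Decimal("0.05"),
    "expenses": Decimal("40000"),
})
```

Here `gross_income` is computed once and reused by both `vacancy_loss` and `net_income`, which is the kind of intermediate-result sharing the graph makes possible.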

Versioned Calculation Snapshots

Every result should be anchored to a specific logic version and context — including default values, business rules, and formulas. This ensures historical reproducibility and regulatory defensibility.
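A simple way to anchor a result to its logic version is to fingerprint the rules themselves. The sketch below assumes the logic can be serialized as a plain dictionary; `snapshot_id` and the field names are hypothetical, and a production system would likely store far more context than this.

```python
import hashlib
import json


def snapshot_id(logic: dict) -> str:
    """Content hash of a logic definition (formulas, defaults, rules).

    Serializing with sorted keys and fixed separators gives a canonical
    form, so the same logic always hashes to the same identifier.
    """
    canonical = json.dumps(logic, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


logic_v1 = {
    "formulas": {"vacancy_loss": "gross_income * vacancy_rate"},
    "defaults": {"vacancy_rate": "0.05"},
}
logic_v2 = {
    "formulas": {"vacancy_loss": "gross_income * vacancy_rate"},
    "defaults": {"vacancy_rate": "0.07"},  # one default changed
}

# Any result stored alongside its snapshot ID can later be matched
# to the exact rules that produced it.
stored_result = {"value": "6000.00", "snapshot": snapshot_id(logic_v1)}
```

Because the identifier is derived from content rather than assigned by hand, changing a single default yields a different snapshot ID, and an old result can never be silently attributed to new rules.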

Immutable Audit Logs

Each answer must come with a complete provenance trail: inputs used, defaults applied, paths chosen, and any values excluded or overwritten. This is essential for review, debugging, and audit scenarios.
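As a rough illustration of the idea, the sketch below chains log entries together with SHA-256 hashes. Strictly speaking this makes the log tamper-evident rather than immutable: edits are still possible, but they become detectable. The `AuditLog` class and its record fields are assumptions made for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, body: str) -> str:
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the one before it."""

    def __init__(self) -> None:
        self._entries = []

    def append(self, record: dict) -> str:
        prev = self._entries[-1]["hash"] if self._entries else GENESIS
        body = json.dumps(record, sort_keys=True)
        entry_hash = _digest(prev, body)
        self._entries.append({"prev": prev, "record": body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; any edited entry breaks it."""
        prev = GENESIS
        for entry in self._entries:
            if entry["prev"] != prev:
                return False
            if entry["hash"] != _digest(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({
    "inputs": {"rent": "1000", "units": "10"},
    "defaults_applied": {"vacancy_rate": "0.05"},
    "excluded": [],
})
log.append({"inputs": {"expenses": "40000"}, "overwritten": {}})
assert log.verify()
```

Each record carries the provenance fields described above (inputs, defaults, exclusions), and `verify()` gives a reviewer a quick integrity check before relying on the trail.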

Structured, API-Ready I/O

Inputs and outputs should be machine-readable (e.g., YAML or JSON), allowing integration with LLM agents, no-code tools, orchestration frameworks, and third-party platforms.
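A minimal sketch of what a JSON contract between an LLM agent and a math engine could look like follows. The request shape (`formula`, `inputs`), the `handle` function, and the `simple_interest` handler are all hypothetical, not a documented API. Numbers travel as strings so they can be parsed into exact decimals on arrival.

```python
import json
from decimal import Decimal


def simple_interest(principal: Decimal, rate: Decimal, years: Decimal) -> Decimal:
    return principal * rate * years


# Allowlist of callable formulas; anything else is rejected.
HANDLERS = {"simple_interest": simple_interest}


def handle(raw_request: str) -> str:
    """Accept a JSON request and return a JSON response."""
    request = json.loads(raw_request)
    name = request["formula"]
    if name not in HANDLERS:
        return json.dumps(
            {"status": "error", "error": f"unknown formula: {name}"},
            sort_keys=True,
        )
    # Parse numeric strings into exact decimals; floats never appear.
    inputs = {key: Decimal(value) for key, value in request["inputs"].items()}
    result = HANDLERS[name](**inputs)
    return json.dumps(
        {
            "status": "ok",
            "formula": name,
            "inputs": request["inputs"],  # echoed back for traceability
            "result": str(result),
        },
        sort_keys=True,
    )


response = handle(json.dumps({
    "formula": "simple_interest",
    "inputs": {"principal": "1000", "rate": "0.05", "years": "2"},
}))
```

In this arrangement the language model's only job is to emit a well-formed request; whatever it writes is treated as data to validate, not as an answer to trust.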

Business Logic Stored by Context

The system must support custom business logic at multiple levels — industry-wide defaults, company-specific rules, or client-specific constraints — and enforce them deterministically across workflows. This unlocks true multi-tenant, governed AI systems.
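Layered rules of this kind map naturally onto Python's `collections.ChainMap`, which looks a key up in several dictionaries in priority order. The rule names, tenant identifiers, and `rules_for` function below are invented for illustration.

```python
from collections import ChainMap

# Hypothetical rule layers, from most general to most specific.
INDUSTRY_DEFAULTS = {"vacancy_rate": "0.05", "rounding": "0.01"}
COMPANY_RULES = {
    "acme": {"vacancy_rate": "0.07"},
}
CLIENT_RULES = {
    ("acme", "client-42"): {"rounding": "1"},
}


def rules_for(company: str, client: str) -> ChainMap:
    """Effective rules for a tenant: client overrides company,
    company overrides industry defaults."""
    return ChainMap(
        CLIENT_RULES.get((company, client), {}),
        COMPANY_RULES.get(company, {}),
        INDUSTRY_DEFAULTS,
    )


effective = rules_for("acme", "client-42")
# vacancy_rate comes from the company layer, rounding from the client layer.
```

Since the lookup order is fixed, two requests made in the same context always resolve to the same rule set, which is what makes per-tenant logic enforceable rather than advisory.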

Why Constraint Isn’t Enough — and What Comes Next

Some small LLMs, under tightly controlled configurations, can approximate deterministic behavior. But doing so requires heavy infrastructure support (fixed seeds, retrieval controls, prompt standardization), and even then the model is still probabilistic under the hood.

Rather than forcing a non-deterministic model to behave deterministically, it’s often better to delegate execution to a system that is deterministic by design. Let the language model do what it does best — interpret — and let a purpose-built execution layer handle what must be exact.

This isn’t just an engineering tradeoff. It’s a safety choice.

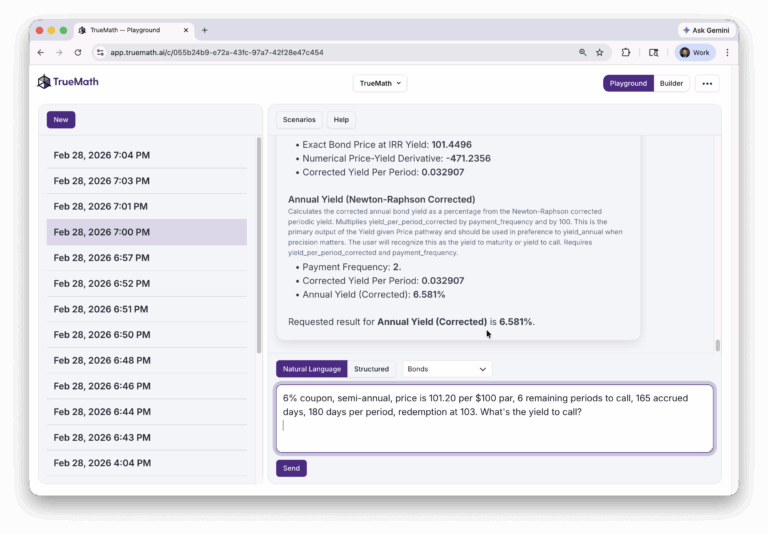

This Architecture Exists — and It’s Operational

IBM’s framework is visionary — but it doesn’t have to stay in the whitepaper phase. This kind of infrastructure already exists in the real world. That’s what we’ve built at TrueMath.

TrueMath addresses the problems of AI math by serving as the deterministic, auditable math engine behind LLMs. We’re to AI math what Stripe is to payments or Cursor is to code: foundational infrastructure that removes risk, accelerates delivery, and scales with the intelligence of the systems built on top of us.

The Bottom Line

AI can now understand what to do. But when correctness is non-negotiable, understanding isn’t enough — the system must prove how it did it.

As the IBM study makes clear, LLMs drift. Trust doesn’t. That’s why future-ready AI systems will rely on an execution layer built for reproducibility, governance, and scale.

And that layer is no longer theoretical. It’s here.

Reach out: bill.kelly@truemath.ai

Learn more: truemath.ai

Sign up for early access: https://app.truemath.ai/signup